The Decline of the Average Mind

Things fall apart

Barry Sanders

The average: what an odd word. It looks like it might have come from aver—to speak the truth. But it does not. It is a feudal term that first appears in the Domesday Book (1086) and refers to a day’s work that an English peasant rendered to the King in payment of tenancy. In the late Middle Ages the word migrated into the world of shipping. Average becomes conflated with the French avarie, “the cost of damaged shipboard property,” which, by design, shippers assigned among all the owners of goods, in proportion to their interests. Added to the total price of the product, it resulted in a calculation called the “average cost.” By 1770, phrases like the following routinely appear: “the average price of corn.” Not until the early 1830s, however, does average take on its more contemporary and perhaps more democratic meaning: “to estimate by dividing the aggregate of a series by the number of units.”

The average was not always something arithmetically derived. In the ancient world, philosophers urged citizens, in all their endeavors, to seek a space they called The Happy Mean or The Golden Mean. For Aristotle, the pursuit of the via media demands the highest order of virtue and character insofar as individuals must continually find the balance point between extremes for themselves by evaluating and assessing each situation.

For centuries, people knew their place in the cosmos and they clung to it. They did not need Plato or Aristotle to nudge them one way or another. In the philosophical template known as the Great Chain of Being, human beings hung midway between angels and animals, with all the inanimate objects below. God occupied the top link, the devil crouched at the bottom. People could aspire to the angelic life or descend to the level of animals. They might reign as kings or serve as serfs. But no matter their desires or station, they retained their medial link in the chain. One of the most persistent ideas in history, the Chain of Being directed human affairs from the time of the Ancient Greeks to the early eighteenth century. Again, the midway, the average, provided the home for every person.

What makes the average—a coveted spot for so many centuries—turn into such a term of derogation? What critical social changes had to take place for that kind of radical shift to occur? I give only the bare outline here, for the story is a long and detailed one. But one thing seems fairly clear: the 18th century begins to mathematize everyday experience to such a degree that words like average, common, and mean—absolutely positive or at worst neutral terms as the century opens—begin to take on fairly negative meanings. Rather than a goal one strives to attain, the average becomes an insult one hopes to avoid: a person is consigned, doomed even, to be average. Today, unless one is talking about cholesterol levels or blood pressure, there is no way to compliment a person using the word average. Even if one describes the other person as “well above average,” the implication of being just average casts its very dark shadow over the compliment.

The degradation of the center begins in the 18th century with the introduction of a most powerful mathematical instrument, statistical enumeration. Among other things, statistics made the social scientist a possibility and state control a certainty. (Statistics gains its foothold in policy decisions here.) For the first time, while he remained sitting at a desk (a bureau), an administrator (a bureaucrat) of the state could send workers into the field to tally various aspects of the general population—earnings, living conditions, marital status and, towards the end of the century, skin color. As numbers began to assume supreme importance, flesh receded into the background. The average took on mathematical certainty; it became the baseline for making judgments about people’s behavior as they moved toward excess or fell away into deficiency.

This is the mathematical mentality that Jonathan Swift attacks in “A Modest Proposal.” His recipes for carving up and cooking babies, with exacting measurements of size and temperature, satirize, in part, the way statistical thinking reduces living creatures to hat size, height, longevity, along with an infinite list of other variables that 18th-century bureaucrats counted, for the first time, as data. Swift saw the coming nightmare of a world circumscribed by the power of this new authority, the bureaucrat. But he could not foresee that the erosion of human essence would prepare the island’s subjects for the kind of repetitive work they would be required to perform in the Industrial Revolution of the next century. He could not see that the former would serve as a preparation for the efficient implementation of the latter. What passed through those factory doors were no longer people but average workers. One can have sympathy for a person, but not for an average, composite person. No single individual died in Vietnam. Instead, Americans puzzled over a new phenomenon called “body counts.”

As the 18th century laid its mathematical grid over experience, the daily mess and unpredictability of life disappeared. Statistics made state control simpler by rendering individuals as ciphers. Ideas and concepts got reinterpreted and reduced to numbers and displayed as data points in primitive bar graphs and charts. Reality altered radically, taking its first step toward the virtual. We begin to see the contemporary version of the via media, not as the median way, but indeed as a foretaste of that William Gates-inspired life of total technological mediation, in which the haptic life recedes farther and farther into the distance.

In one of his more persuasive books, Discipline and Punish, Michel Foucault points out subtle changes in methods and practices of surveillance, discipline and constraint in the 19th century, that is, in the wholesale implementation of new strategies of social control. What he does not mention is that the reduction of people to integers and ciphers, in the preceding century, made such a shift possible. We can describe that new drive toward incarceration as the after-math of a major shift from the 18th-century view of the world as a teeming Vanity Fair to a view of it as something measurable, predictable and, of ultimate importance to those in authority, absolutely controllable. In this new, normalizing configuration, there must be those whom society defines and categorizes as uncontrollable, as abnormal even. The normal can only find its way against these aberrant men and women, whom the state must, in one way or another, institutionalize. Here, the story of the average takes a decisive turn.

Foucault confronted a period dominated by the desire of natural philosophers and scientists to classify, order, and rank every living and non-living thing in the universe. One can find evidence of this new ordering everywhere. Linnaeus’s earlier attempts at botanical taxonomies, for instance, find a welcome audience in the 19th century. The brothers Grimm, trained as philologists, develop an elaborate genealogy of the world’s languages, and rank them from the most pure (Hebrew and Aramaic) to the most corrupt (English). An American philologist, Francis James Child, along with others, sorts and orders (according to theme) the entirety of English ballads and folktales. The Grimms do the same for fairy tales. In 1869, Dimitrii Mendeleev provides the scientific basis for the Periodic Chart. Species, genus and phylum give shape and everlasting order to the animal kingdom. Darwin of course does his own ordering of the animal kingdom.

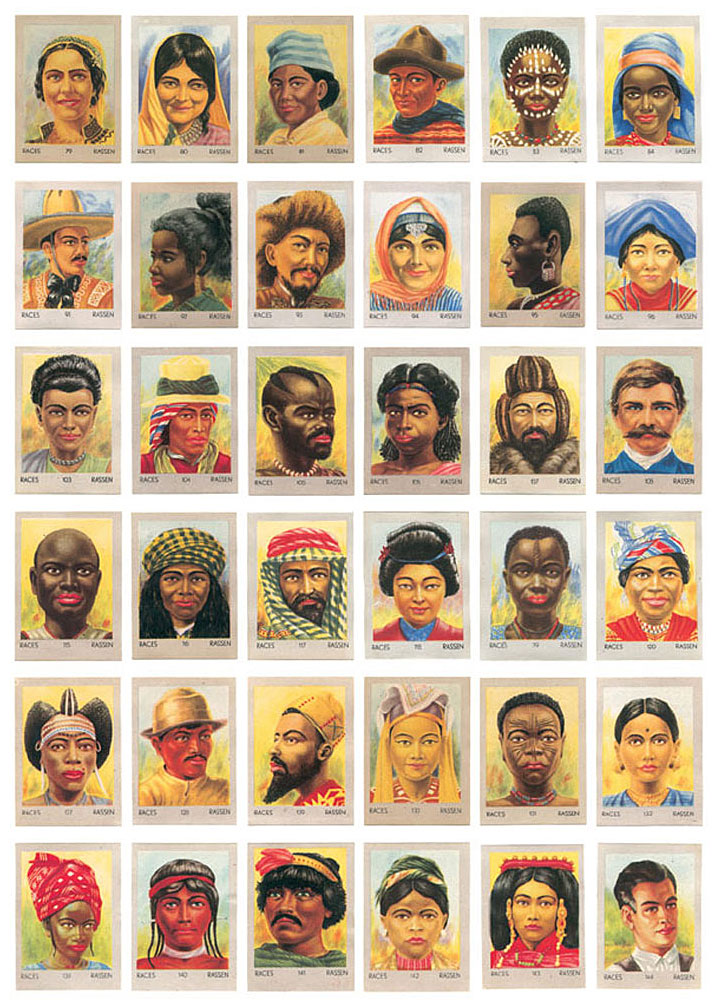

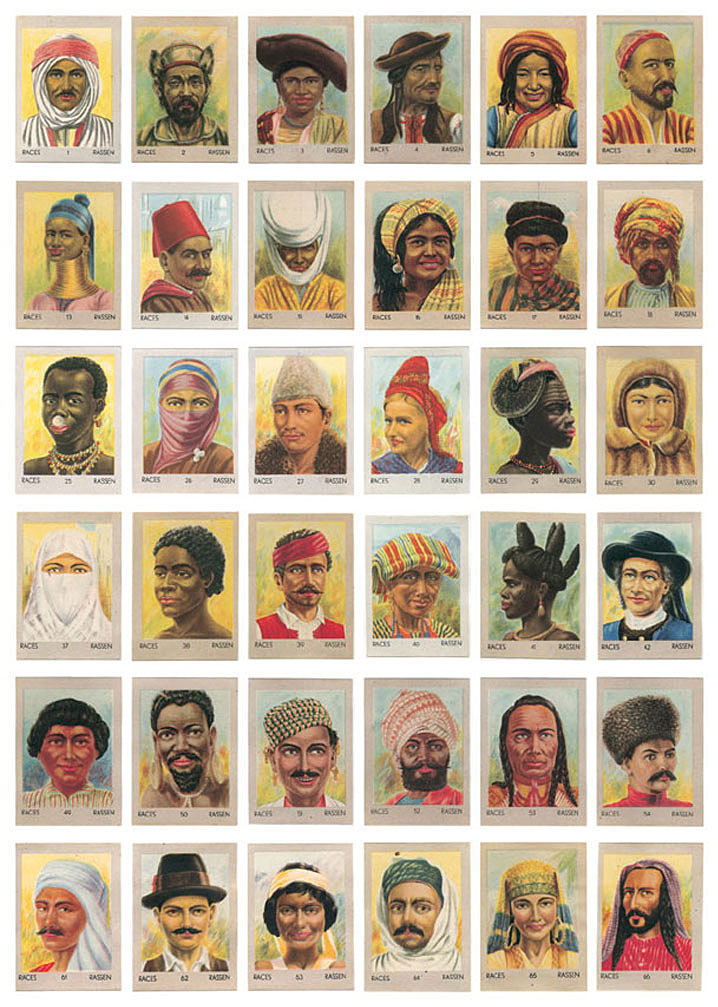

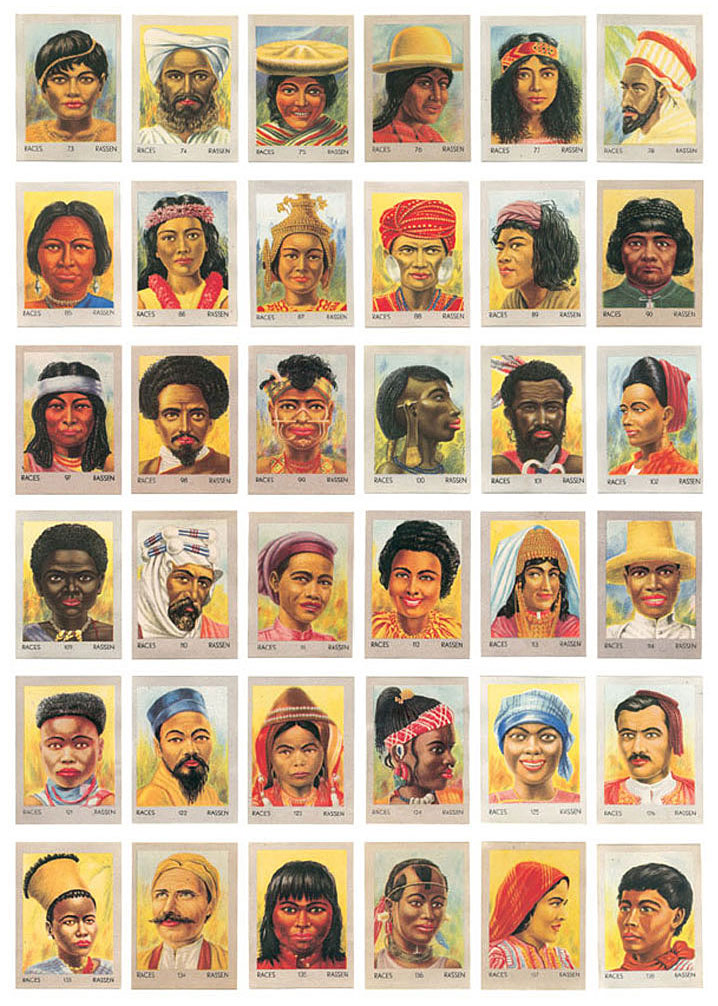

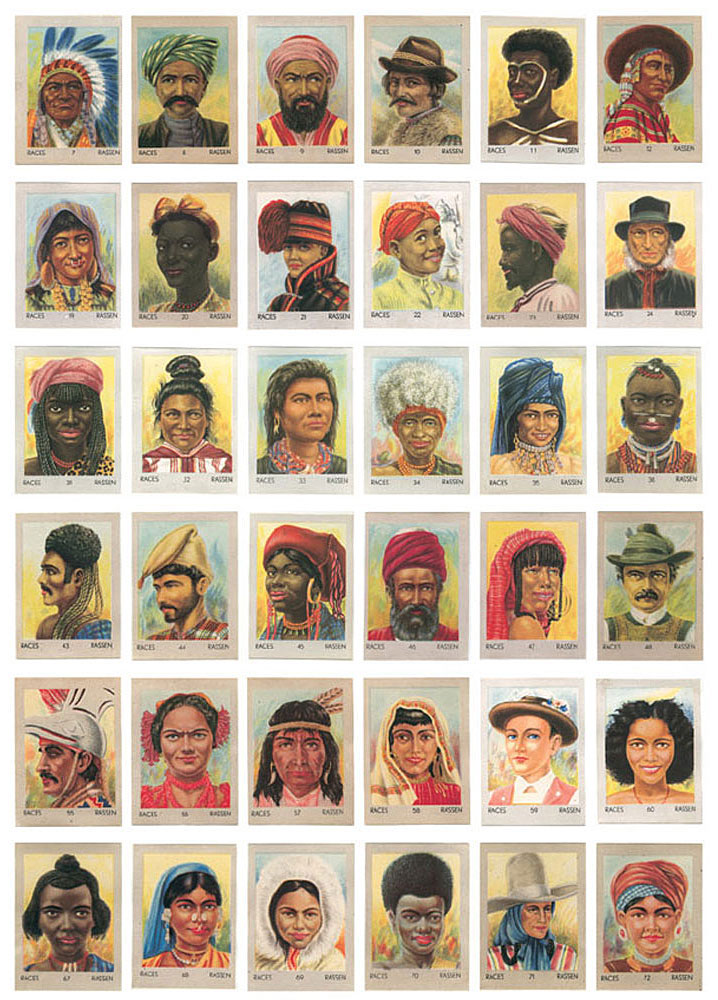

The most insidious form of ranking and ordering stems from a scientific idea that J.F. Blumenbach, a German anatomist, had proposed as early as 1775: the reduction of the world’s people into five racial groups: Caucasians, Mongolians, Malays, American Indians, and Ethiopians. Nineteenth-century anatomists and anthropologists began to rank those races from the massively superior—Caucasians—to the hopelessly inferior—Ethiopians. Samuel Morton, an American anthropologist who worked in the early 19th century, provided the notion of racial inferiority with so-called scientific proof. He measured the cranial capacity of over 1,000 skulls that he had collected from “every quarter of the world.” Correlating skull size with brain size and thus with intelligence, Morton published his results in 1839 in a volume titled Crania Americana: By a magnitude of five or six, he claimed, Caucasians possessed the largest skulls, followed by Mongolians, Malays, American Indians; far down on the bottom, with very small brains, remained the Ethiopians.

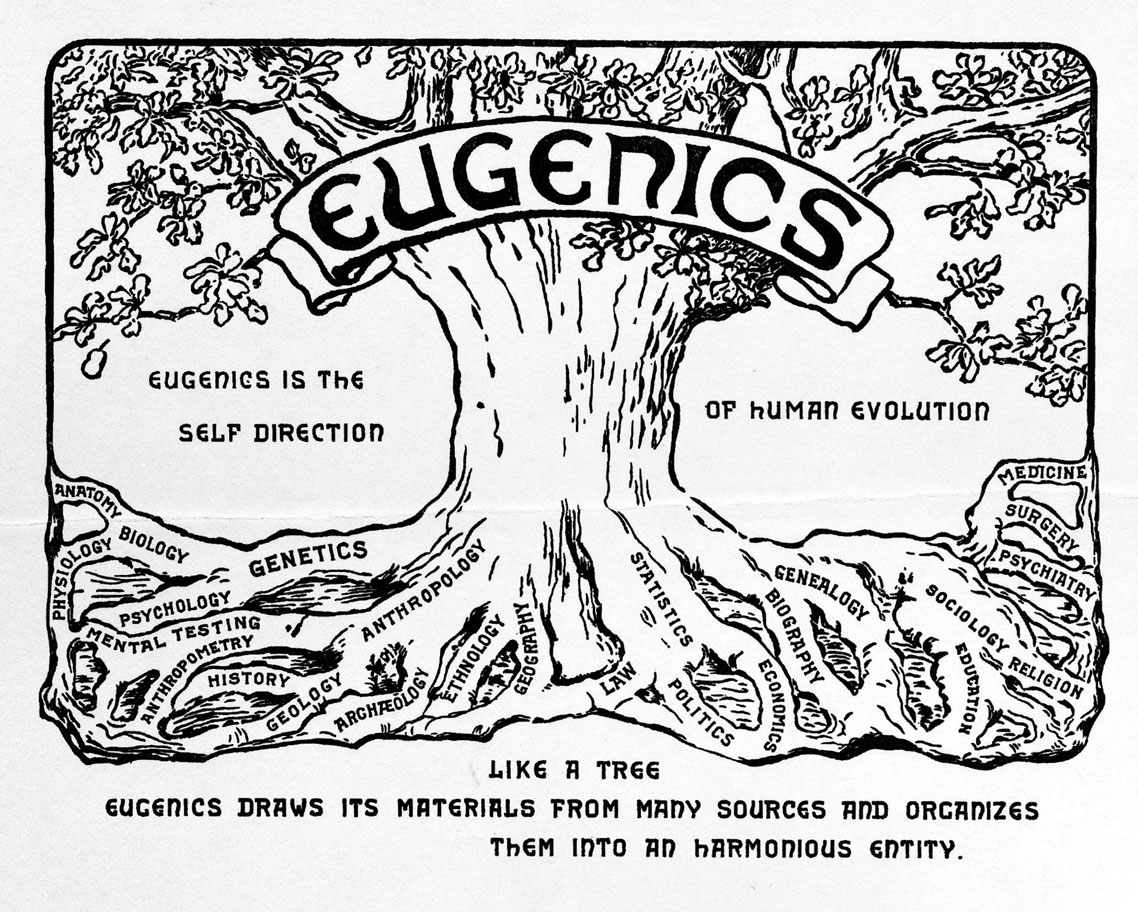

Aside from Herbert Spencer and his application of Darwin to social issues, Darwin’s cousin, Francis Galton, deserves the title of the period’s leading scientific racist. From a contemporary Belgian statistician, Adolphe Quetelet, Galton borrowed the idea of grouping data along a bell-shaped curve. In Quetelet’s science, which he termed social mechanics, one could determine the average of any physical or intellectual property in an entire population. Galton, however, used the “normal curve” only insofar as it helped to locate and define the dismally inferior.

While Quetelet had used the curve for portraying an array of astronomical errors, Galton used it to make his theories of human inferiority graphically clear. If one were to measure any aspect of a given population—height, weight, sex—roughly half the population would arrange themselves around the middle of the bell. Galton referred to these people, in a new, mediocre sense, as the average. The truly superior ones—the geniuses, the wealthy, the highborn, the mighty few—gathered at one end of the bell curve, singular and virtually untouchable, a firewall between them and roughly 90% of the rest of the population. At the other end were the inferior ones—the deviants and criminals of all sorts. Here, too, is where Galton placed all the black folks in a population with all those small brains that Morton had measured.

But as if that news were not bad enough for blacks and other disenfranchised groups, Galton added yet another layer. In his new science of eugenics (modern genetics), once a person found himself or herself at the dismal end of, say, the intelligence array, he or she could rise only slightly, if at all, for according to Galton, mental acuity was inborn. A person’s genes condemned him or her to a near-permanent place on the bell curve. Psychometrists coined new words to describe the congenitally dull-witted: idiot, moron and imbecile. In the line-up, a 19th-century forensic tactic on which the criminal justice system so clumsily and so blindly depends, these people become the “usual suspects.”

Recall that Galton’s ideas of innate traits came at a time when both England and America were becoming more and more class-conscious, when a person entered the upper class only through right of birth. One could not move from, say, the middle to the upper class in incremental steps through hard work and diligence. Excellence of character meant little. As people searched for ways out of their locked-in plights, they created phrases that revealed their desperation. People prayed “to strike it rich,” hoped for a “windfall,” strove to become “self-made men or women” or, in an image of logical impossibility, struggled “to pull themselves up by their own bootstraps.” The average citizen wanted to move higher and higher—to be, in the language of modern sociology, upwardly mobile.

Such aspirations came to a halt for many young people in the 1960s. The majority of activists in the 60s willfully placed themselves at the cast-off end of the bell curve. They vacated the average, the normal, and linked arms with blacks and so-called deviants. They took their political direction, left, seriously and headed in the opposite direction from their parents and grandparents. Indeed, whoever felt average—just average—could be reborn at the inferior end of the spectrum as a politico, an activist, and even a revolutionary. The 60s blew up the bell curve. Like African-Americans, who took the power and sting out of the word nigger by seizing it back as their own, so the New Left co-opted the dreaded tail of the curve and adopted it as their own.

Very little for them would be normal anymore—at least the word normal would not describe or define anything in their lives. For, after all, who could call the Vietnam War normal? Or racism? Or homophobia? College curricula, the safeguard of all that was normal, got hit particularly hard. (We might note that only recently have we seen a lull in the fighting in the so-called culture wars, although the Bush administration has picked up the cudgel again.) The canon—the inventory of normal and accepted literature—flew wildly apart in the mid-to-late 60s. Academics had revised the canon many times. But here was something new—the displacement of the white, European tradition by the addition of black literature, Eastern European fiction, minority poetry, gay and lesbian memoirs, and on and on. In this new configuration, the canon embraced the world in general, from first-world favorites to third-world upstarts. Professors would no longer hand out a normal syllabus; they would teach no normal classes. In a shocking New York Review of Books essay in 1967, Noam Chomsky announced that, until the conclusion of the war, he would devote all his scholarly writing to a critique of Vietnam and American foreign policy. This from a man who had revolutionized the discipline of linguistics. Life—and academic life with it—moved to the edge. At times it even tipped over—fell off the charts, moved off the curve.

The New Left realized—and the idea worked itself throughout the university—that standardized testing and its standard deviations and the resulting graceful curve, all of it supposedly predicated on finding the center and the norm, rested on 19th-century racist assumptions. Over its many years of implementation, the SAT effectively eliminated the bulk of minority applicants to college. Who the hell was going to tell us, the left said, what was normal—certainly no one in this imperial, racist culture. One could not have solidarity with some trumped-up, arithmetically derived average. Get rid of the center, get rid of tests, get rid of grades altogether.

To aver, to speak the truth, helped to isolate the idea of the average and its pesky friend the normal. A phrase like “the average American” has been relegated to the domain of the politician. In the early days of his presidency, one heard the mantra from George W. Bush that the average American could expect a refund of $300 from the federal government after passage of his tax cuts.[1] Of course, that average American did not exist—at least not in any great numbers—and came into being only when some statistician in the office of the federal budget mingled the grand returns of the wealthy with the pittance doled out to the poor.

And average’s buddy, the normal? Well, that word, too, has its own quirky history. Normal derives from the name of a medieval tool for making right angles—a norma. By the 19th century, the word became synonymous with the average and, by extension of its original meaning, came to describe posture: one’s ability to stand at a perfect 90 degrees to the ground—ramrod straight. Schools for teaching teachers in this country were called normal schools because, among other things, they taught the importance of perfect posture. Just as one could tell a criminal in the 19th century from his or her physiognomy—“low brows” are all thieves and crooks—one could instantly see moral rectitude reflected in proper posture. This is what it meant to be upright and true, as a normal, average American citizen. People who stand up straight are inclined to do good.

Ah yes, things have relaxed a bit. At least now the idea of the average seems slightly worn out or left standing off in the corner. And we know from Mr. Yeats that when the center loses its grip, things will certainly fall apart. In this post-postmodern moment, we can all feel free to slouch our own individual and eccentric selves towards Bethlehem. We must have hope. For who can tell what is waiting to be born?

[1] According to “The Ultimate Burden of the Tax Cuts,” an article by William Gale, Peter Orszag and Isaac Shapiro available through the Center on Budget and Policy Priorities, “the average tax cut” for the bottom 20 percent of the taxpayers was $19. “The average tax cut” for the middle 20 percent was $652. And “the average tax cut” for the over one-million-dollar category of taxpayer was $136,398. The article is available at www.cbpp.org/archives/6-2-04tax.htm.

Barry Sanders is professor of the history of ideas at Pitzer College, of the Claremont Colleges. His last book, Alienable Rights: The Exclusion of African Americans in a White Man’s Land, 1619–2000, co-written with Francis Adams, was nominated for the Pulitzer Prize and the Robert Kennedy Book Award.